1. Introduction

The last six months have been very busy on the Artificial Intelligence front. The National Academy of Technologies of France (NATF) has just issued a short position paper discussing some aspects of LLMs (large language models) and conversational agents such as ChatGPT. Although much has happened very recently, reading the yearly report from Stanford, the 2023 AI Index Report, is a good way to reflect on the constant stream of AI innovations. While some fields show a stabilization of the achieved performance level, others, such as visual reasoning, have kept progressing over the last few years (now better than human performance on the VQA challenge). The report points out the rise of multi-modal reasoning systems. Another trend that has been growing for some time is the use of AI to improve AI algorithms, such as PaLM being used by Google to improve itself. This report is also a great tool to evaluate the constant progress of the underlying technology (look at the page about GPUs, which have shown “constant exponential progress” over the past 20 years) or the relative position of countries on AI research and value generation.

It is really interesting to compare this “2023 AI Index Report” with the previous 2022 edition. In a post from last year, the importance of transformers / attention-based training was already visible, but the yet-to-come explosive success of really large LLMs coupled with reinforcement learning was nowhere in sight. In a similar way, it is interesting to re-read the synthesis of “Architects of Intelligence – The Truth about AI from the People Building It”: the bulk of this summary still stands, and the warnings about the impossibility of forecasting have proven to be “right on target”.

Despite what the press coverage portrays, AI is neither a single technology nor a unique tool. It is a family of techniques, embedded in a toolbox of algorithms, components and solutions. When taking the shortcut of talking about AI as a single technique, it is almost inevitable to get it wrong about what AI can or cannot do. In the 2017 NATF report (see figure below) we summarized the toolbox along two axes: do you have a precise/closed question to solve (classification, optimization) or an open problem? Do you have lots of tagged data from previous problems or not? The point is that “one size does not fit all”: different techniques serve different purposes. For instance, the most relevant techniques for forecasting are based on using very large volumes of past data and correlations. However, in the past few “extra VUCA years” of COVID, supply chain crises and wars, forecasting based on correlation has not done so well, whereas causal AI and simulation have a lot to offer in this new world. As noticed by Judea Pearl or Yann LeCun, one of the most exciting frontiers for AI is world modeling and counterfactual reasoning (which is why “digital twins” are such an interesting concept). It is important to notice that exponential progress fueled by Moore’s Law happens everywhere. Clearly, on the map below, deep learning is the field that has seen the most spectacular progress in the past 20 years. However, what can be done with agent communities to simulate large cities, or what you can expect from the more classical statistical machine learning algorithms, has also changed a lot compared to what was feasible 10 years ago. The following figure is an updated version of the NATF figure, reflecting the arrival of “really large and uniquely capable” LLMs into the AI toolbox. The idea of LLMs, as recalled by Geoffrey Hinton, is pretty old, but many breakthroughs have occurred that make LLMs a central component of the 2023 AI toolbox.
As said by Satya Nadella: “thanks to LLM, natural language becomes the natural interface to perform most sequences of tasks”.

Figure 1: The revised vision of the NATF 2017 Toolbox

This blog post is organized as follows. Section 2 looks at generative AI in general and LLMs in particular, as a major on-going breakthrough. Considering what has happened in the past few months, this section is different from what I would have written in January, and will probably become obsolete soon. However, six months past the explosive introduction of ChatGPT, it makes sense to draw a few observations. Section 3 takes a fresh look at the “System of Systems” hypothesis, namely that we need to combine various forms of AI (components in the toolbox and in the form of “combining meta-heuristics”) to deliver truly intelligent/remarkable systems. Whenever a new breakthrough appears, it gets confused with “the AI technology”, the approach that will subsume all others and … will soon become AGI. Section 4 looks at the AI toolbox from an ecosystem perspective, trying to assess how to leverage the strengths of the outside world without losing your competitive advantage in the process. The world of AI is moving so fast that the principles of “Exponential Organizations” hold truer than ever: there are more smart people outside than inside your company, you cannot afford to build exponential tech (only) by yourself, you must organize to benefit from the constant flow of technology innovation, and so on. There are implicitly two tough questions to answer: (1) how do you organize yourself to benefit from the constant progress of the AI toolbox (and 2023 is clearly the perfect year to ask this question)? (2) how do you do this while keeping your IP and your proprietary knowledge about your processes, considering how good AI has become at reverse-engineering practices from data?

2. Large Language Models and Generative AI

Although LLMs have been around for a while, four breakthroughs have happened recently, which (among other things) explain why the generative AI revolution is happening in 2023:

- The first breakthrough is the transformer neural network architecture, which started (as explained in last year’s post) with the famous 2017 article “Attention is all you need”. The breakthrough is simplicity: training an RNN (recurrent neural network) that operates on a sequence of inputs (speech, text, video) has been an active but difficult field for many years. The idea of “attention” is to encode/compress what an RNN must carry from the analysis of the past section of a sequence to interpret the next token. Here simplicity means scale: a transformer network can be grown to very large sizes because it is easier to train (more modular, in a way) than previous RNN architectures.

- The second breakthrough is the emergence of knowledge “compression” when the size grows over a few thresholds (over 5 and then 50 billion parameters). The NATF had the pleasure of interviewing Thomas Wolf, a leader of the team that developed BLOOM, and he told us about this “emergence”: you start to observe a behavior of a different nature when the size grows (and if this sounds vague, it is precisely because it is hard to characterize). Similar observations may be found while listening to Geoffrey Hinton or Sam Altman on the Lex Fridman podcast. The fun fact is that we still do not understand why this happens, but the emergence of this knowledge compression created the concept of prompt engineering, since the LLM is able to do much more than stochastic reconstruction. So, beware of anyone who would tell you that generative AI is nothing more than a “stochastic parrot” (it is too easy to belittle what you do not understand).

- As these LLMs are trained on very large corpora of generic texts, you would expect to have to retrain them on domain-specific data to get precise answers relevant to your field. The third breakthrough (still not really explained) is that some form of transfer learning occurs and that the LLM, using your domain-specific knowledge as its input, is able to combine its general learning with your domain into a relevant answer. This is especially spectacular when using a code generation tool such as GitHub Copilot. From experience, because my Visual Studio plugin uses the open files as the context, GitHub Copilot generates code that is amazingly customized to my style and my on-going project. This also explains why the length of the context (32k tokens with GPT4 today) is such an important parameter. We should get ready for the 1M-token contexts that have already been discussed, which would support giving a full book as part of your prompt.

- The last breakthrough is the very fast improvement of RLHF (reinforcement learning from human feedback), which has itself been accelerated by the incredible adoption rate of ChatGPT. As told in the MIT Technology Review article, the growth of the ChatGPT user base came as a surprise to the ChatGPT team itself. Transforming an LLM into a capable conversational agent is (still) not an easy task, and although powerful LLMs were already in use in many research labs as of two years ago, the major contribution of OpenAI is to have successfully curated a complete process (from fine-tuning, through training the reward model with RLHF to guide the LLM toward more relevant outputs, to inner prompt engineering such as chain-of-thought prompting, which is very effective in GPT4).
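To make the “attention” idea of the first breakthrough more concrete, here is a minimal sketch of single-head scaled dot-product attention, the core operation of the 2017 article, written in plain Python. It is only an illustration under simplifying assumptions: there are no learned projection matrices, no masking and no multiple heads, and the names `softmax` and `attention` are chosen here for readability rather than taken from any library.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Single-head scaled dot-product attention on lists of vectors."""
    d = len(keys[0])  # key dimension, used for the 1/sqrt(d) scaling
    outputs = []
    for q in queries:
        # Similarity of this query with every key in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output = weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Toy "sequence" of three 2-dimensional token embeddings (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = attention(tokens, tokens, tokens)  # self-attention: Q = K = V
```

Each output vector is a weighted average of all the value vectors, so every position can draw directly on any other position in the sequence. The outputs for different positions do not depend on each other, which is one commonly cited reason why this architecture is easier to scale than a recurrent one.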

It is probably too early to stand back and see all that we can learn from the past few months. However, this is a great illustration of many digital innovation principles that have been discussed in this blog over the past 10 years, such as the importance of engineering (thinking is great but doing is what matters), the emergence mindset (as Kevin Kelly said over 20 years ago, “intelligent systems are grown, not designed”) and the absolute necessity of an experimentation mindset (together with the means to execute, since, as was beautifully explained by Kai-Fu Lee in his book “AI Superpowers”, “size matters in AI development”). This is a key point, even though the nature of engineering is then to learn to scale down, that is, to reproduce with less effort what you found with a large-scale experiment. The massive development of open-source LLMs, thanks to the LLaMA code released by Meta and optimization techniques such as LoRA (Low-Rank Adaptation of LLMs), gives a perfect example of this trend. A lot of debate about this topic was generated by the leaked article by Luke Sernau from Google. Although the commoditization of LLMs is undeniable, and the performance gap between large and super-large LLMs is closing, there is still a size advantage for growing market-capable conversational agents. Also, as told by Sam Altman, there is a “secret sauce” for ChatGPT, which is “made of hundreds of things” … and lots of accumulated experience on RLHF tuning. If you still have doubts about what GPT4 can do, I strongly recommend listening to Sebastien Bubeck. From all the “human intelligence assessments” performed with GPT4 during the past few weeks, it is clear (to me) that LLMs work beautifully even though we do not fully understand why.
As will become clear while reading the rest of the post, I do not completely agree with Luke Sernau’s article (size matters, there is a secret sauce), but I recognize two key ideas: dissemination will happen (it is more likely that we shall have many LLMs of various kinds than a few large general-purpose ones) and size is not all that matters. For instance, DeepMind with its Chinchilla LLM focuses on a “smaller LLM” (70B parameters) that may be trained on a much larger corpus. Smaller LLMs outperform larger ones in some contexts because they are easier to train, which is what happened when Meta’s (open-sourced) LLaMA was compared with GPT3. Another trend that favors the “distributed/specialized” vision is the path that Google is taking, with multiple “flavors” of its LaMDA (Language Model for Dialogue Applications) and PaLM (Pathways Language Model), which is specialized into Med-PaLM, Sec-PaLM and others.
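The “scale down” effect of LoRA mentioned above can be illustrated with a simple parameter count. LoRA keeps a pre-trained weight matrix of shape d × k frozen and only trains two small matrices of shapes d × r and r × k whose product is added to it. The sketch below just counts trainable parameters; the dimensions (4096 × 4096, rank 8) are illustrative assumptions, not figures from any specific model discussed in this post.

```python
def full_update_params(d: int, k: int) -> int:
    """Trainable parameters when fine-tuning a full d x k weight matrix."""
    return d * k

def lora_update_params(d: int, k: int, r: int) -> int:
    """Trainable parameters with a rank-r update B (d x r) times A (r x k)."""
    return r * (d + k)

# Illustrative (assumed) dimensions for one weight matrix.
d, k, r = 4096, 4096, 8
full = full_update_params(d, k)      # 16,777,216
lora = lora_update_params(d, k, r)   # 65,536
ratio = lora / full                  # 1/256 of the full update
```

With these assumed sizes, the low-rank update trains 256 times fewer parameters for that matrix, which gives an idea of why such techniques let much smaller teams adapt an existing model.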

For many reasons, labelling LLMs as “today’s artificial intelligence” is somewhat misleading, but they are certainly a new, exciting form of “Artificial Knowledge”. There are many known limits to LLMs, which are somewhat softened by RLHF and prompt engineering, but are nevertheless strong enough to keep in mind at all times. First, hallucinations (when the LLM does not have the proper knowledge embedded in its original training set, it finds the most likely match, which is not only wrong but very often plausible, hence confusing) mean that you need to be in control of the output (unless you are looking for random output). Hallucinations are tricky because, by construction, when the knowledge does not exist in the training corpus, the LLM “invents” the most plausible completion, which is false but designed to look plausible. There are many interesting examples when you play with GPT to learn about laws, norms or regulations. It does very well at first on generic or common questions but starts inventing subsections or paragraphs of existing documents while quoting them with (what is perceived as) authority. The same thing happens with my own biography: it is a mix of real jobs, articles and references, intermixed with books that I did not write (with interesting titles, though) and positions that I did not have (but could have had, considering my background).

This is why the “copilot” metaphor is useful: generative AI agents such as ChatGPT are great tools as co-pilots, but you need to “be the pilot”, that is, be capable of checking the validity and the relevance of the output. ChatGPT has proven to be a great tool for creative sessions, but when some innovation occurs, the pilot (you) is doing the innovation, not the machine. As pointed out in the “Stanford AI report”, generative AI is not the proper technique for scheduling or planning, nor is it a forecasting or simulation tool (with the exception of providing a synthesis of available forecasts or simulations that have been published earlier). This is precisely the value of Figure 1: to remind oneself that specific problems are better solved by specific AI techniques. Despite the media hype about LLMs being the “democratization of AI available for all”, I find it easier to see these tools as “artificial knowledge” rather than “intelligence”. If you have played with asking GPT4 to solve simple math problems, you were probably impressed, but there is already more than an LLM at work: preprocessing through “chain of thought” prompt engineering adds an abstraction layer that is not a native feature of LLMs. We shall return to how generative AI will evolve through hybrid combination and API extensions in the next section. By using the “artificial knowledge” label, I see GPT4 as a huge body of compressed knowledge that may be queried with natural language.

In this blog post, I focus on the language capabilities brought by LLMs, but generative AI is a much broader discipline, since GPTs (generative pre-trained transformers) can operate on many other inputs. Also, there are many other techniques, such as Stable Diffusion for images, to develop “generative capabilities”. As pointed out in the AI Index Report quoted in the introduction, multi-modal prompting is already here (as shown by GPT4). Conversely, an LLM can transform words into words … but it can also transform words into many other things such as programming languages or scripts, or 3D models of objects (hence the Satya Nadella quote). Besides the use of knowledge assistants to retrieve information, it is likely that no-code/low-code (such as Microsoft Power Apps) will be one of the key vectors of generative AI introduction into companies in the years to come. The toolbox metaphor becomes truly relevant to see the various “deep learning components” that transform “embeddings” (compressed input) as “Lego bricks” in a truly multi-modal playground (video or image to text, text to image/video/model, model/signals to text/image, etc.). Last, we have not seen the end of the applicability of the transformer architecture to other streams of input. Some of the complex adaptive process optimization problems of digital manufacturing (operating in an optimal state space from the input of a large set of IoT sensors) are prime candidates for replacing the “statistical machine learning” techniques (cf. Figure 1) with transformer deep neural nets.

3. Hybrid AI and Systems of Systems

A key observation from the past 15 years is that state-of-the-art intelligent systems are hybrid systems that combine many different techniques, whether they are elementary components or the assembly of components with meta-heuristics (GAN, reinforcement learning, Monte-Carlo Tree Search, evolutionary agent communities, to give a few examples). Todai Robot is a good example of the former, while DeepMind’s many successes are good examples of the latter (I refer you to the excellent Hannah Fry podcast on DeepMind, which I have often advertised in my blog posts). DeepMind is constantly updating its reinforcement learning knowledge in the form of a composable agent. The aforementioned report from NATF gives other examples, such as the use of an encoder/decoder architecture to recognize defects on manufactured products while training on a large volume of pieces without defects (a useful use case, since pieces with defects are usually rare). The ability to combine, or to enrich, elementary AI techniques with others or with meta-heuristics is the reason for sticking with the “AI toolbox” metaphor. The subliminal message is not to specialize too much, but rather to develop skills with a larger set of techniques. The principle of “hybrid AI” generalizes to “system of systems”, where multiple components collaborate, using different forms of AI. Until we find a truly generic technique, this approach is a “best-of-breed” system engineering method to pick from the toolbox the best that each technique can bring. As noted in the NATF report, “system of systems” engineering is also a way to design certifiable AI if the “black box components” are controlled with (provable) “white box” ones.
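The last point, a “black box” component controlled by a “white box” one, can be sketched in a few lines. This is a toy illustration under stated assumptions, not a description of any real system: `learned_speed` stands in for an opaque learned model, the safety rule and its numbers (1.5 m/s cap, half the free distance) are invented for the example, and `supervised` is a hypothetical helper name.

```python
from typing import Callable

def supervised(black_box: Callable[[float], float],
               is_safe: Callable[[float, float], bool],
               fallback: Callable[[float], float]) -> Callable[[float], float]:
    """Wrap an opaque model so that every proposal passes an auditable rule."""
    def controller(x: float) -> float:
        proposal = black_box(x)
        # The explicit rule has the final word; the fallback is rule-based too.
        return proposal if is_safe(x, proposal) else fallback(x)
    return controller

def learned_speed(distance_m: float) -> float:
    """Stand-in for an opaque learned model (hypothetical)."""
    return 0.4 * distance_m

def speed_is_safe(distance_m: float, speed: float) -> bool:
    """White-box rule (assumed): at most 1.5 m/s and at most half the free distance."""
    return 0.0 <= speed <= min(1.5, 0.5 * distance_m)

def conservative_speed(distance_m: float) -> float:
    """Rule-based fallback that satisfies the safety rule by construction."""
    return min(1.5, 0.5 * distance_m)

controller = supervised(learned_speed, speed_is_safe, conservative_speed)
near = controller(2.0)    # the model's proposal is accepted
far = controller(10.0)    # the proposal (4.0) is rejected, fallback gives 1.5
```

Whatever the learned component proposes, the output of `controller` always satisfies the explicit rule, which is the property one would try to certify.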

ChatGPT is a hybrid system in many ways. The most obvious way is the combination of LLM and RLHF (reinforcement learning from human feedback). If you look closer at the multiple steps, many techniques are used to grow, through reinforcement learning, a reward system that is then combined with the LLM to shape its output. In a nutshell, once a first step of fine-tuning is applied, reinforcement learning with human operators is used to grow a reward system (a meta-heuristic that the LLM-based chatbot can later use to select the most relevant answer). OpenAI has worked for quite some time on reinforcement learning algorithms such as PPO (Proximal Policy Optimization), and the successive versions of RLHF have grown to be large, sophisticated and hybrid in their own way, taking advantage of the large training set brought by the massive adoption (cf. point #4 of Section 2). As explained by Sam Altman in his YouTube interview, there are many other optimizations that have been added to reach the performance level of GPT4, especially with chain-of-thought extensions. Thinking of an LLM as a key component is quite different from thinking of it as a new form of all-purpose AI. The title of this blog post is trying to make this point. First let me emphasize that “English fluency” and “knowledge compression” are huge breakthroughs in terms of capabilities. We will most certainly see multiple impressive consequences in the years to come, beyond the marvels of what GPT4 can do today. Thinking in terms of toolbox and capabilities means that “English fluency” (both the capacity to understand questions in their natural language format and the capacity to respond in well-formed and well-balanced English sentences) can be added to almost any computer tool (as we are about to see soon). English is just an example language here, although my own experience tells me that GPT is better with English than with French.
However, when you consider the benefits of being able to query any application in natural language versus following the planned user interface, one can see how “English fluency” (show me your data, explain this output, justify your reasoning …) might become a user requirement for most applications of our information systems. On the other hand, recognizing that GPT is not a general-purpose AI engine (cf. the previous comment about planning, forecasting and the absence of a world model other than the compression of experiences embedded into the training set) has led OpenAI to move pretty fast on opening GPT4 as a component, which is a sure way to promote hybridization.

The opening of GPT4 with inbound and outbound APIs is happening fast considering the youth of the OpenAI software components. Inbound APIs help you to use GPT4 as a component to give dialog capabilities to your own system. Think of it as “prompt engineering as code”, that is, using the expressive power of computer languages to probe GPT4 in the directions that suit your needs (and yes, that covers the “chain of thought” approach mentioned earlier, that is, instructing GPT4 to solve a problem step by step, under the supervision of another algorithm, yours, to implement another kind of knowledge processing). Outbound APIs let ChatGPT call your own knowledge system to extend its reasoning capabilities or to access better sources of information. Here the best example to look at is the combination of Wolfram Alpha with GPT. Another interesting example is the interplay between GPT and knowledge graphs. If you remember the toolbox of Figure 1, there is an interesting hybrid combination to explore, that of semantic tools (such as ontologies and knowledge graphs) with LLM capabilities from tools such as ChatGPT. Thus, one reason for selecting this post’s title was to draw the reader’s attention to the fast-growing field of GPT APIs, versus thinking of GPT as a stand-alone conversational agent.
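The inbound/outbound pattern described above can be sketched as a small supervising loop. The code below is a toy under explicit assumptions: it does not use the OpenAI API, `ask_model` is any function from prompt text to reply text (here a hard-coded stub named `fake_model`), and the `CALL name: arguments` / `RESULT:` text convention is invented for this illustration.

```python
from typing import Callable, Dict

def solve_step_by_step(ask_model: Callable[[str], str],
                       question: str,
                       tools: Dict[str, Callable[[str], str]],
                       max_steps: int = 5) -> str:
    """Supervise a model: let it reason in steps and call our own tools."""
    transcript = f"Question: {question}\nSolve step by step."
    for _ in range(max_steps):
        reply = ask_model(transcript)          # inbound: we call the model
        if reply.startswith("CALL "):
            name, _, arg = reply[5:].partition(":")
            tool = tools.get(name.strip())     # outbound: the model calls us
            result = tool(arg.strip()) if tool else "unknown tool"
            transcript += f"\n{reply}\nRESULT: {result}"
        else:
            return reply                       # plain text is the final answer
    return "no answer within the step budget"

def fake_model(prompt: str) -> str:
    """Hard-coded stand-in for an LLM (hypothetical), for illustration only."""
    if "RESULT:" not in prompt:
        return "CALL multiply: 12 7"
    result = prompt.rsplit("RESULT: ", 1)[1]
    return f"The answer is {result}."

def multiply(arg: str) -> str:
    """Our own 'knowledge system': exact arithmetic on two integers."""
    a, b = (int(x) for x in arg.split())
    return str(a * b)

answer = solve_step_by_step(fake_model, "What is 12 times 7?", {"multiply": multiply})
```

The design choice to notice is that the loop, the step budget and the list of callable tools all stay in your own code: the model proposes, but your algorithm decides what is executed.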

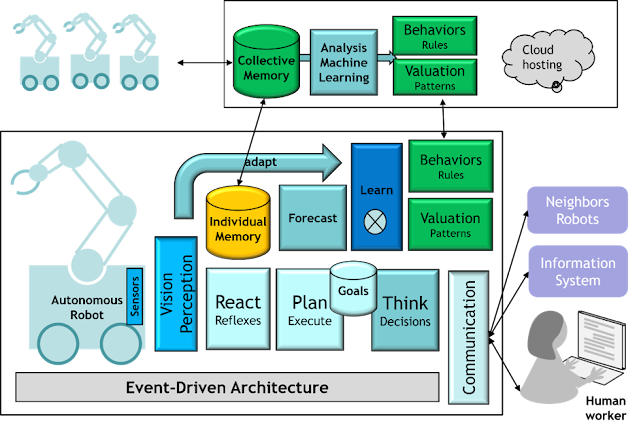

The idea that we need a system of systems approach to build “general purpose AI” (not AGI, “just” complex intelligent systems with a large range of adaptive behavior) is only a hypothesis, but one that seems to hold for the moment. I am reproducing below a figure that I have overused in my blog posts or talks, but that illustrates this point pretty well. The question is how to design a smart autonomous robot for a factory that is able to learn on its own but also able to learn as a community, from similar robots deployed in the factory or in similar factories. Community learning is something that Tesla cars are doing by sharing vehicle data so that experience grows much faster. Any smart robot would build on the huge progress that neural-net AI made a decade ago in perception and recently (this post’s topic) in natural language interaction. On the other hand, a robot needs to have a world model, to generate autonomous goals (from larger goals, by adapting to context) and then to plan and schedule. The robot (crudely) depicted in the picture illustrates the combination of many forms of AI represented in Figure 1. Security in a factory is a must; hence the system of systems is the framework of choice to include “black box” components under the supervision of certifiable/explainable AI modules (from rules to statistical ML inference, there are many auditable techniques). Similarly, this figure illustrates the dual need for real-time “reflex” action and “long-term” learning (which can be distributed on the cloud, because latency and security requirements are less stringent).

Figure 2: Multiple AI bricks to build a smart robot community

4. AI Ecosystem Playbook

This last section is about “playing” the AI ecosystem, that is, taking advantage of the constant flow of innovation in AI technology while keeping one’s differentiating know-how safe. 2023, with the advent of the multiple versions and variants of GPT, makes this question very acute. On the one hand, you cannot afford to miss what OpenAI and Microsoft (and Google, and many others) are bringing to the world. On the other hand, these capabilities are offered “as a service”, and require a flow of information from your company to someone else’s cloud. The smarter you get with your usage, the more your context/prompts grow, and the more you tell about yourself. I also want to emphasize that this section deals with only one (very salient but limited) aspect of protecting the company when using outside AI tools. For instance, when using GPT4 or GitHub Copilot to generate code, the question of the IP status of the fragments “synthesized” from the “open source” training data is a tough one. Until we have (source) attribution as a new feature of generative AI, one has to be careful with the commercial use of “synthetic answers” (a large part of open-source code fragments requires the explicit mention of their provenance).

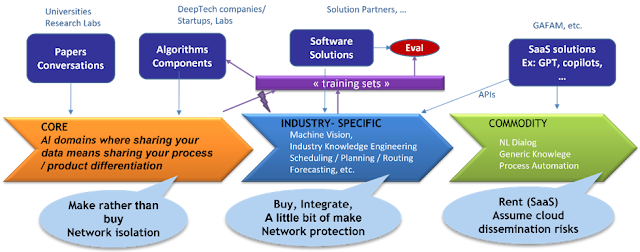

The following figure is a simplified abstraction of how we see the question of protecting our know-how at Michelin. It is based on recognizing three AI domains:

- “Core AI”: when the algorithm reproduces a differentiating process of the company (this is obvious for manufacturing companies but is much more widely applicable). What defines “core AI” is that the flow of information (data or algorithm) can only be from outside to inside. In many cases, telling a partner (a research lab or a solution vendor) about your digital traces (from the machines, connected products or IoT-enriched processes) is enough to let others become experts in your own field, with the benefit of your own experience that is embedded into your data. Deciding that a domain is “core” is likely to slow you down because it places a large burden on playing “the ecosystem game”, but it is sometimes wiser to be late than to be disrupted.

- “Industry AI” is what you do, like your competitors, that is specific to your industry. This is where there is the most to be gained from reusing solutions or techniques that have been developed by the outside ecosystem to solve problems that you share with others. Even though there are always aspects that are unique to each manufacturing, distribution or supply chain situation, the nature of the problems is common enough that “industry solutions” exist, and sharing your associated data is no longer a differentiation risk.

- “Commodity AI” represents the solutions for problems that are shared across all industries, activities that are generic, for instance for “knowledge workers”, and offer similar optimization and automation opportunities across the globe. Because of the economy of scale, “commodity AI” is by no means of lower quality; on the contrary, commodity AI is developed by very large players (such as the GAFAM) on very large sets of data and represents the state of the art of the methods in Figure 1.

Figure 3: AI Ecosystem – Trade-off between differentiation and leverage

This distinction is a little bit rough, but it yields a framework about what to use and what not to use from the outside AI ecosystem. As shown in Figure 3, Core AI is where you make your own solutions. It does not mean that you do not need to learn about the state of the art by reading the relevant literature or implementing some of the new algorithms, but the company is in charge of making its own domain-specific AI. It also requires extra care from a network isolation and cybersecurity perspective, because when your process/product know-how is embedded into an AI piece of software, the risk of both IP theft and very significant cyber-attacks grows. Industry AI is the realm of integration of the “best of breed” solutions. The main task is to identify the best solutions, which requires a large exchange of data, and to integrate them to build your own “systems of systems”. Customization to your needs often requires writing a little bit of code of your own, such as your own meta-heuristics, or your own data processing/filtering. These solutions also need to be protected from cybersecurity threats (for the same reasons: the more you digitize your manufacturing, the more exposed you are), but IP theft from data leaks is less of a problem (by construction). Industry AI is based on trust with your partners, so selecting them is critical. Commodity AI solutions already existed before you considered them; they are often offered as a service, and it is wise to use them while recognizing that your level of control and protection is much lower. This is the current ChatGPT situation: you cannot afford to miss the opportunity, but you must remember that your prompt data goes to enrich the cloud base and may be distributed, including to competitors, later on.
Since commodity AI has the largest R&D engine in the world (tens of billions of dollars), it has to be a key part of your AI strategy, but learning to use “AI as a service” with data and API call threads that do not reveal too much is the associated skill that you must develop.

Figure 3 also represents a key idea, that of “public training sets”, which we implemented at Bouygues 25 years ago. Training sets are derived from your most significant problems, using either data from “industry AI” problems that have been cleaned or, sometimes, data from “core AI” problems that have been significantly transformed so that the problem is still there, but the reverse engineering of your own IP is no longer possible. Training sets are used internally to evaluate the solutions of outside vendors, and they can also be shared with those vendors to facilitate and accelerate the evaluation. As pointed out in the following conclusion, knowing how your internal solutions and pieces of system stand against the state of the art is a must for any AI solution, and curating “training sets” makes this comparison possible (we used to call them “test sets” when the preferred optimization technique was OR algorithms, and moved to “training sets” with the advent of machine learning). It is easy for technical teams to focus on delivering “code that works”, but the purpose of the AI strategy is to deliver as much competitive value as possible. Training sets may be used to organize public hackathons, such as the ROADEF challenge or the famous competition that Netflix organized, more than a decade ago, on recommendation algorithms. Experience shows that learning to curate the training sets for your most relevant problems is a great practice, as any company that has submitted a problem to the ROADEF challenge knows. It forces communication between the teams and is more demanding than it sounds. Foremost, it embodies the attitude that open innovation (looking out for what others are doing) is better than the (in)famous “ivory tower” mentality.

5. Conclusion: Beware of Exponential Debt

The summary for this blog post is quite simple:

- Many breakthroughs have happened in the field of LLM and conversational agents. It is a transformative revolution you cannot afford to miss. Generative-AI-augmentation will make you more productive, provided that you keep being “the pilot”.

- Think of LLM as unique capabilities: language fluency and knowledge management. They work as standalone tools, but much more value is available if you think “systems of systems” and start playing with in/out APIs and extended contexts.

- You cannot afford to go alone, you must play the ecosystem, but find out how to benefit from the external solutions without losing the control of your internal knowledge.

I will not attempt to conclude with a synthesis about the state of AI that could be proven wrong in a few months. On the contrary, I will underline a fascinating consequence of the exponential rhythm of innovation: whichever piece of code you write, whichever algorithm you use, it becomes obsolete very fast since its competitive performance follows a reverse law of exponential decay. In a tribute to the reference book “Exponential Organizations”, I call this phenomenon exponential debt, which is a form of technical debt. The following figure (borrowed here) illustrates the dual concept of exponential growth and exponential decay. What exponential debt means is that, when AI capabilities grow at an exponential rate, any frozen piece of code has a relative performance (compared with the state of the art) that decays exponentially.
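The notion of exponential debt can be turned into a back-of-the-envelope calculation. The sketch below assumes, purely for illustration, that state-of-the-art performance doubles every `doubling_time_years`; that parameter and the example value of two years are assumptions, not measured figures. Under this assumption, a piece of code frozen for t years keeps a fraction 2^(-t/T) of the state-of-the-art performance.

```python
import math

def relative_performance(years_frozen: float, doubling_time_years: float) -> float:
    """Performance of frozen code relative to a state of the art that keeps doubling."""
    return 2.0 ** (-years_frozen / doubling_time_years)

def years_until(threshold: float, doubling_time_years: float) -> float:
    """Years before frozen code falls to `threshold` (between 0 and 1) of the state of the art."""
    return doubling_time_years * math.log2(1.0 / threshold)

# Assumed doubling time of 2 years, for illustration only.
after_two_years = relative_performance(2.0, 2.0)   # half of the state of the art
quarter_point = years_until(0.25, 2.0)             # about 4 years
```

The exact numbers matter less than the shape of the curve: the faster the field moves (the shorter the doubling time), the faster untouched code loses its relative value.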

This remark is a nice way to loop back to the necessity of building exponential information systems, that is, modular systems that can be changed constantly with “software as flows” processes. As pointed out by Kai-Fu Lee, AI requires science and engineering, because AI is deployed as a “modality” of software. Scientific knowledge is easily shared, whereas engineering requires experience and practice. Being aware of exponential debt is one thing; being able to deal with it requires great software engineering skills.

This is one of the key topics of my upcoming Masterclass on June 23rd.

4 comments:

Superb article. Bravo, as I am also in favor of hybridizing AIs. To go further, one could let so-called “societal” trusted AIs oversee other trusted AIs, in what I call bringing governability to AI: that is, monitoring that an AI does not go beyond its intent, monitoring the unintentional as well, and finally, the icing on the cake, finding the optimum between contradictory injunctions.

Thank you. A very complete post, almost too complete. I got a little lost in the references and cross-references. The theme of “knowledge compression” does give me some pause, though.


Thanks for sharing such a great information.. It is really helpful for me..I always search to read the quality content and finally i found this in your post. keep it up!

Fission nuclear power

excellent information
