1. Introduction

This blog post is a return to what I used to do when I started my blogs: sharing a few thoughts (“musings”) on a specific topic, as opposed to writing longer texts that are either full essays or book reviews. The topic of the day is the difficulty of keeping promises, such as meeting SLAs (Service Level Agreements, here defined in terms of lead time), in a VUCA world: volatile, uncertain, complex and ambiguous. This difficulty stems both from the complexity of the object that is being promised (that is, knowing beforehand how much effort, time and resources will be necessary) and from the complexity of the environment (all the concurrent pressures that make securing the necessary efforts and resources uncertain, volatile, and complex).

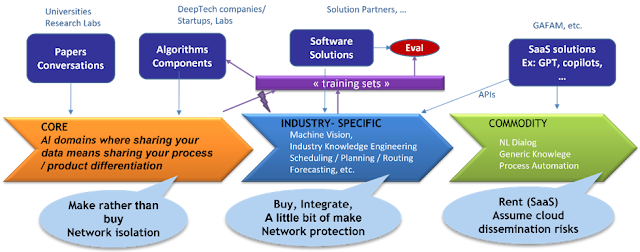

Professionally, as a software and IS executive for the past 30 years, I have experienced first-hand how difficult it is to deliver many information systems projects on time. Moving to agile methodologies has helped our profession to recognize the VUCA difficulty and to reduce the number of promises that could not be kept. However, the complex interdependency of projects in a company still requires orchestration roadmaps, with project teams agreeing on, and meeting, synchronization milestones. Therefore, a key skill in the digital world is to balance the need to manage emergence (some capabilities are grown without precise control over lead time) with the control of continuous delivery.

The thesis that I will explore in this blog post is that, when both the environment and the nature of the object/product/service that is being promised become more complex (in the full VUCA sense), we need to make fewer promises, make promises that are simpler to understand (which does not mean simpler to deliver) and, conversely, make promises that strongly bind your resources. The most important corollary is that the first thing one needs to learn in a VUCA world is to say no. However, saying no all the time, or refusing to make any promises, is not a practical solution either, so the art of the VUCA promise is also a balancing act, grounded in humility and engagement.

This blog post is organized as follows. Section 2 looks at waiting queues, and how they are used everywhere as a demand management tool. We know this from the way our calls (or emails) are handled in call centers, but this is also true of an agile backlog, which is equally managed as a (sorted) queue. The queue, such as the line in front of a popular pastry shop, is the oldest form of self-stabilizing demand management (you decide how long you are ready to wait based on the value you expect to get), but not necessarily the most efficient one. Section 3 looks at alternatives to manage demand in a VUCA context, from resource management such as Kanban to adaptive prioritization policies. I will look at different situations, including the famous “Beer Game”, which shows that resilience under a VUCA load is poorly served by complexity. Section 4 takes a deeper dive into “service classes” and how to handle them using adaptive policies. I will briefly present the DDMRP (demand driven material requirement planning) approach as one of the leading ways to “live with a dynamic adaptive supply chain”. There is no silver bullet, and the techniques to strengthen one’s promises should not be opposed but combined.

2. Waiting Queues as Demand Management

I have always been fascinated by the use of queues as demand management devices; it was the topic of one of my early blog posts, in 2007. Queues are used everywhere, in front of shops, museums and movie theaters, because they are simple and fair (movie theaters moved to pre-reservation through digital apps about a decade ago). Fairness matters here: there is a large body of sociological work on how people react to queues, depending on their nationality and culture. This fairness comes from the transparency of the process: even if you do not like it, you can see how you are managed. This is the famous FCFS (first come, first served) policy, which has been a constant topic of attention: it is fair, but it is efficient neither for the service provider nor for the customer. If you step back, the FCFS policy has two major shortcomings:

- In most cases, including for healthcare practitioners, priorities differ, since the value associated with each upcoming appointment varies considerably. In the case of practitioners, priority comes either from the urgency of the situation or from a long-standing relationship with the patient.

- In many cases, a customer who is refused an appointment within an “acceptable” time window may decide never to return (which is why small street businesses try never to turn down a customer). Whether this matters depends on the business context and on the scarcity of competing offers … obviously, this is not a big issue for ophthalmologists today. However, the problem is amplified by demand variability. If demand is stable, the long queue is a self-adjusting mechanism that shaves the excess demand. But with high variability, “shaving the demand” creates oscillations, a problem that we shall meet again in the next section (due to the complex, non-linear nature of a service with a queue).

Consequently, healthcare practitioners find ways to escape the FCFS policy:

- Segmenting their agenda into different zones (which is the heart of yield management), that is, having time slots that are open to FCFS booking through, for instance, Doctolib (which fairly exposes the agenda, used as a sorted backlog), and other time slots that are reserved for emergencies (either from a medical emergency perspective or from a long-time customer relationship perspective).

- Forcing the agenda allocation to escape FCFS, either by inserting patients “between appointments” or by shifting appointments (with or without notice). In all cases, this is a trade-off in which the practitioner implicitly or explicitly breaks the original promise in order to maximize value.

The remainder of this post will discuss these two options: zoning (reserving resources for classes of service) and dynamic ordering policies. Both are a departure from fairness, but they aim to create value.

Unkept promises yield amplified demand; this is a well-known observation in many fields. In the world of call centers, when the waiting queue to reach an agent gets too long, people drop the call and come back later. When trying to forecast the volume of calls that need to be handled, the first task of the data scientist is therefore to reconstruct the original demand from the observed number of calls. This is also true for medical practitioners: when the available appointments are too far in the future, people keep looking for other options and drop their appointment later. The same happens with restaurants and hotels, which is why you are now often charged a “reservation fee” to make sure that you are not hedging your bets with multiple reservations. This is a well-known adaptive behavior: if you expect to be under-served, you tend to amplify your demand. This is why the title of this blog post matters, “keeping your promises in a VUCA world”: if you don’t, not only do you degrade customer satisfaction, but you also encourage an adaptive behavior that further degrades your capacity to meet your promises in the future. This is not at all a theoretical concern; companies that let their customers place “future” orders know this first-hand, and it makes supply chain optimization even more complex. The additional complexity comes from the non-linear amplification: the more a customer is under-served, the more requests are “padded” with respect to the original need. If you manage to keep your promises, you avoid entering a phenomenon that is hard to analyze, since customers react differently to this “second-guessing game”.
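
To make the reconstruction step concrete, here is a minimal sketch under a deliberately simple retrial model that I am assuming for illustration (real call-center data calls for richer models): every call attempt is abandoned with probability `p_abandon`, an abandoning caller tries again with probability `p_retry`, and attempts are independent. Each genuine request then generates on average 1 / (1 - p_abandon × p_retry) attempts (a geometric series), so dividing the observed attempts by that factor recovers the original demand. The function names are hypothetical.

```python
def attempts_per_request(p_abandon: float, p_retry: float) -> float:
    """Expected number of call attempts generated by one genuine request.

    Assumed model: each attempt is abandoned with probability p_abandon, and an
    abandoning caller tries again with probability p_retry, independently of
    previous attempts. The number of attempts is then geometric: 1 / (1 - q).
    """
    q = p_abandon * p_retry
    if not 0 <= q < 1:
        raise ValueError("p_abandon * p_retry must be in [0, 1)")
    return 1 / (1 - q)


def reconstruct_demand(observed_attempts: float, p_abandon: float, p_retry: float) -> float:
    """Estimate how many genuine requests lie behind the observed call attempts."""
    return observed_attempts / attempts_per_request(p_abandon, p_retry)


print(round(reconstruct_demand(10_000, p_abandon=0.40, p_retry=0.75)))  # 7000
```

With 10,000 observed calls, 40% abandonment and 75% of abandoning callers retrying, the sketch estimates about 7,000 genuine requests: 30% of the observed volume is the echo of unkept promises.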

Trying to understand how your stakeholders will react to a complex situation and second-guess your behavior is a fascinating topic. In his great book, “The Social Atom”, Mark Buchanan recalls the social experiment of Richard Thaler, who proposed in 1997 a game to the readers of the Financial Times, which consists of guessing a number "that must be as close as possible to 2/3 of the average of the other players' entries." It is a wonderful illustration of bounded rationality and of second-guessing what the others will do. If players are foolish, they answer 50 (the average between 0 and 100). If they think "one step ahead," they play 33. If they think hard, they answer 0 (the only fixed point of the thought process, that is, the unique solution to the equation X = 2/3 X). The verdict: the average entry was 18.9, and the winner chose 13. This is extremely interesting information when simulating actors or markets (it allows calibrating a distribution between "fools" and "geniuses"). Thaler’s game gives insights into a very practical problem: how can you leverage the collective intelligence of the salesforce to extract a forecast of next year’s sales while, at the same time, part of their variable compensation is linked to reaching next year's goal? This is an age-old problem, but one that is made much more acute in a VUCA world. The more uncertain the market, the more it is in the sales agents’ best interest to protect their future gain by proposing a lower estimate than what they actually expect. This is precisely why game theory in general, and GTES in particular, is necessary to understand how multiple stakeholders react in a VUCA context.
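
The second-guessing chain in Thaler’s game can be made explicit with “level-k” reasoning, a common way to model this game (my framing here, not Thaler’s or Buchanan’s): a level-0 player answers 50, and a level-k player plays 2/3 of the level-(k-1) answer. The sketch below computes these guesses and the average entry of a hypothetical mix of reasoning depths, which is the kind of calibration knob mentioned above; the shares are purely illustrative and are not fitted to the Financial Times results.

```python
def level_k_guess(k: int, anchor: float = 50.0, ratio: float = 2 / 3) -> float:
    """Entry of a player who reasons k steps ahead in the 2/3-of-the-average game.

    Level 0 naively plays the anchor (50); level k best-responds to a crowd of
    level k-1 players by playing ratio times their entry.
    """
    return anchor * ratio**k


def average_entry(shares: dict[int, float]) -> float:
    """Average entry for a hypothetical population {reasoning depth: share}."""
    total = sum(shares.values())
    return sum(share * level_k_guess(k) for k, share in shares.items()) / total


for k in range(5):
    print(k, round(level_k_guess(k), 1))  # 50.0, 33.3, 22.2, 14.8, 9.9

# Illustrative mix: depth 1000 stands for players who go all the way to the fixed point 0.
print(round(average_entry({0: 0.2, 1: 0.3, 2: 0.2, 1000: 0.3}), 1))
```

The guesses shrink geometrically towards the fixed point 0, and any observed average (such as 18.9) can be matched by several different mixes, which is why such a calibration is a modeling choice rather than a measurement.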

This question of meeting your promises in a VUCA context is critical to modern software development. It has always been an issue in software development, but the VUCA context of digital/modern software products makes it more acute (I refer you to my last book, where I discuss why the digital transformation context makes software development more volatile and uncertain, mostly because of the central role of the user, together with the fast rate of change pushed by exponential technologies). Agile methods, with their backlogs of user stories, offer a way to adapt continuously. Before each sprint starts, the backlog is re-prioritized dynamically according to the evolution of the context (customer feedback, technology evolution, competitors’ moves, etc.). However, as soon as we look at large-scale systems, some milestones have hard deadlines and should not be reshuffled dynamically. Not everything is “agile”: coordination with advertising campaigns, partners, or legacy systems requires agreement at a large scale (which is why large-scale agile methodologies such as SAFe introduced “PI planning” events). More than 15 years ago at Bouygues Telecom, when developing software for set-top boxes, we introduced “clock tags” in some user stories in the backlog. Later, at AXA’s digital agency, we talked about the “backmap”, the illegitimate daughter of the roadmap and the backlog. To make this coexistence of “hard-kept promises” with a “dynamically sorted backlog” work, two principles are critically important:

- Do not put a clock stamp until feasibility has been proven. The only uncertainty that is acceptable in a “backmap” is the one about resources (how much will be necessary). This means that the design thinking phase that produces the user story card for the backlog must be augmented, when necessary, with a “technical” POC (proof of concept; there are countless reasons why feasibility may be questionable), which can be light or really complex depending on the issue at stake.

- Do not overuse this mechanism: 30% of capacity for timed milestones is a good ratio, 50% at worst. Agility with the rest of the backlog gives you the flexibility to adapt resources to meet milestones, but only up to a point. There is no “silver bullet” here: the ratio depends on (a) the level of uncertainty (b) your actual, versus theoretical, agility.
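
The two principles above can be turned into a simple backlog check. This is a minimal sketch: the `Story` fields, the `check_backmap` function and the use of story points are my assumptions, and the 30% and 50% thresholds are simply the ratios quoted above, not universal constants.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Story:
    name: str
    effort: float                     # e.g. story points
    clock_tag: Optional[str] = None   # hard milestone, if any
    feasibility_proven: bool = False  # set once a POC has removed the feasibility doubt


def check_backmap(stories: list[Story], capacity: float,
                  target_ratio: float = 0.30, max_ratio: float = 0.50) -> list[str]:
    """Return warnings for a backlog that mixes clock-tagged and freely sorted stories."""
    # Principle 1: no clock tag until feasibility has been proven.
    warnings = [f"{s.name}: clock tag set before feasibility is proven"
                for s in stories if s.clock_tag and not s.feasibility_proven]
    # Principle 2: keep the share of timed work in check.
    ratio = sum(s.effort for s in stories if s.clock_tag) / capacity
    if ratio > max_ratio:
        warnings.append(f"timed stories use {ratio:.0%} of capacity (above the {max_ratio:.0%} ceiling)")
    elif ratio > target_ratio:
        warnings.append(f"timed stories use {ratio:.0%} of capacity (above the {target_ratio:.0%} target)")
    return warnings


backlog = [
    Story("partner API", 8, clock_tag="PI-3", feasibility_proven=True),
    Story("campaign landing page", 5, clock_tag="PI-3"),
    Story("onboarding tweaks", 13),
]
for warning in check_backmap(backlog, capacity=30):
    print(warning)
```

On this hypothetical backlog, the check flags the unproven clock tag and a 43% share of timed capacity, above the 30% comfort ratio but below the 50% ceiling.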

The agile backlog is not a silver bullet for another reason: it takes discipline and craftsmanship to manage and sort the backlog, balancing short-term goals such as value and customer satisfaction with longer-term goals such as cleaning up technical debt and growing the “situational potential” of the software product (its capability to produce the long-term future).

3. Demand-Adaptative Priorities versus Kanban

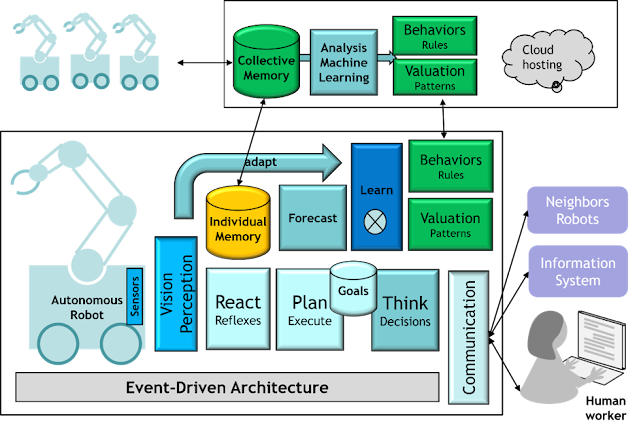

More than 15 years ago, I worked on self-adaptive middleware, whose purpose is to implement “service classes” among business processes. In the context of EDA (event-driven architecture), the middleware routes asynchronous messages from one component to another to execute business processes. Each business process has a service level agreement, which describes, among other things, the expected lead time, that is, the time it takes to complete the business process from end to end. Since there are different kinds of business processes with different priorities, related to different value creation, the goal of an “adaptive middleware” is to prioritize flows to maximize value when the load is volatile and uncertain. This is a topic of interest because queuing theory, especially the theory of Jackson networks, tells us that a pipeline or a network of queues acts as a variability amplifier. A modern distributed information system with microservices can easily be seen as a network of service nodes with handling queues. When a chain of queues is submitted to a highly variable load, this variability can be amplified as it moves from one station to another, something that follows directly from the Pollaczek–Khinchine formula. I became interested in this topic after observing a few crises, as a CIO, when the message routing infrastructure got massively congested.
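
For a single queue with Poisson arrivals and a general service-time distribution (an M/G/1 queue), the Pollaczek–Khinchine formula gives the mean waiting time as Wq = ρ / (1 - ρ) × (1 + Cs²) / 2 × E[S], where ρ is the utilization, E[S] the mean service time and Cs the coefficient of variation of the service time. The short sketch below (function name hypothetical) tabulates it to show how waiting grows with both load and variability; it covers one station only, not the propagation along a chain of queues.

```python
def pk_waiting_time(arrival_rate: float, mean_service: float, service_cv: float) -> float:
    """Mean waiting time in queue for an M/G/1 station (Pollaczek-Khinchine formula).

    Wq = rho / (1 - rho) * (1 + cv**2) / 2 * E[S], where rho = arrival_rate * E[S]
    and cv is the standard deviation of the service time divided by its mean.
    """
    rho = arrival_rate * mean_service
    if rho >= 1:
        return float("inf")  # the queue grows without bound
    return rho / (1 - rho) * (1 + service_cv**2) / 2 * mean_service


# Waiting time (in units of the mean service time) for deterministic (cv=0),
# exponential (cv=1) and highly variable (cv=2) service, at increasing utilization.
for rho in (0.5, 0.8, 0.9, 0.95):
    print(rho, [round(pk_waiting_time(rho, 1.0, cv), 1) for cv in (0.0, 1.0, 2.0)])
```

The 1 / (1 - ρ) factor is the non-linearity: moving from 80% to 95% utilization multiplies the wait by almost five, and doubling the coefficient of variation of service from 1 to 2 multiplies it by 2.5.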

I have written several articles on this work, and I refer you to this 2007 presentation to find out more about policies and algorithms. Here I just want to point out three things:

- I tried to adapt scheduling and resource reservation since my background (at that time) was scheduling and resource allocation optimization. It was quite tempting to import some of the smart OR (operations research) algorithms from my previous decade of work into the middleware. The short story is that it works for a volatile situation (stochastic optimization, when the load distribution varies but the distributions are known) but it does not work in an uncertain world, when the distribution laws are unknown.

- The best approach is actually simpler: it is based on routing policies. This is where the “self-adaptive” adjective comes from: declarative routing policies based on SLAs make no assumptions about the incoming distribution and prove to be resilient, whereas future resource allocation works beautifully when the future is known but fails to be resilient when it is not. Another interesting finding is that, in a crisis, LCFS (last come, first served) is better than FCFS (which is precisely the issue of the ophthalmologist whose entire set of customers is unhappy because of the delays).

- The main lesson is that tight systems are more resilient than loose ones. While playing with the IS/EDA simulator, I compared two information systems. The first one uses asynchronous coupling and queues as a load absorber, and has SLAs that are much larger than end-to-end lead times. It also has fewer computing resources allocated to the business processes, since the SLA tolerates “a little bit of waiting” in a processing queue. The second configuration (for the same business processes and the same components) is much tighter: SLAs are much shorter, and more resources are allocated, so the end-to-end lead time includes much less waiting time. It turns out that the second system is much more resilient! Its performance (SLA satisfaction) shows “graceful degradation” when the load grows unexpectedly (a large zone of linear behavior), while the first system is chaotic and shows exponential degradation. This should come as no surprise to practitioners of lean management, since this is precisely a key lesson from lean (avoid buffers, streamline the flows, and work with just-in-time process management).
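
Two toy models can illustrate the last two findings; neither is the IS/EDA simulator mentioned above, and all names and numbers are hypothetical. The first function replays a deterministic overload on a single server and counts how many jobs meet a 5-tick SLA under FCFS and LCFS. The second uses the exact M/M/1 result that the time in system is exponentially distributed with rate (service rate - arrival rate) to compare a “loose” configuration (fewer resources, generous SLA) with a “tight” one (more resources, short SLA) that have the same SLA satisfaction at nominal load.

```python
import math
from collections import deque


def sla_hit_rate(arrivals: list[int], capacity: int, sla: int, policy: str) -> float:
    """Share of jobs served within `sla` ticks on one server (deterministic toy load).

    arrivals[t] jobs arrive at tick t; the server completes `capacity` jobs per tick.
    "FCFS" serves the oldest waiting job first, "LCFS" the most recent one.
    """
    if policy not in ("FCFS", "LCFS"):
        raise ValueError(policy)
    queue = deque()  # arrival tick of each waiting job
    on_time = total = 0
    t = 0
    while t < len(arrivals) or queue:
        if t < len(arrivals):
            queue.extend([t] * arrivals[t])
            total += arrivals[t]
        for _ in range(capacity):
            if not queue:
                break
            arrived = queue.popleft() if policy == "FCFS" else queue.pop()
            if t - arrived <= sla:
                on_time += 1
        t += 1
    return on_time / total if total else 1.0


def sla_probability(arrival_rate: float, service_rate: float, sla: float) -> float:
    """P(time in system <= sla) for an M/M/1 FCFS queue.

    In a stable M/M/1 queue the time in system is exponential with rate
    service_rate - arrival_rate; when arrivals outpace service, the backlog grows
    without bound and, in the long run, no SLA is met.
    """
    if arrival_rate >= service_rate:
        return 0.0
    return 1 - math.exp(-(service_rate - arrival_rate) * sla)


# Crisis: twice the capacity for 50 ticks, SLA of 5 ticks.
crisis = [2] * 50
for policy in ("FCFS", "LCFS"):
    print(policy, sla_hit_rate(crisis, capacity=1, sla=5, policy=policy))  # 0.11, then 0.53

# Loose vs tight configuration, identical SLA satisfaction at the nominal load 1.0.
loose = {"service_rate": 1.25, "sla": 8.0}  # fewer resources, generous SLA
tight = {"service_rate": 2.0, "sla": 2.0}   # more resources, short SLA
for load in (1.0, 1.1, 1.2, 1.3):
    print(load, round(sla_probability(load, **loose), 2),
          round(sla_probability(load, **tight), 2))
```

Under this overload, FCFS meets the SLA for only 11% of jobs while LCFS meets it for 53% (at the price of fairness: the oldest jobs wait much longer). In the second comparison, both configurations start at about 86% SLA satisfaction; with a 20% load increase the tight one still reaches about 80% while the loose one drops to about 33%, and at 30% the loose queue becomes unstable. This captures only one mechanism behind the “tight beats loose” finding, namely the headroom left before saturation.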

The question of how a supply chain reacts when its input signals become volatile and uncertain is both very old and quite famous. It has led to the creation of “The Beer Game”, a great “serious game” that lets players experiment with a supply chain setting. Quoting GPT: “‘The Beer Game’ is an educational simulation that demonstrates supply chain dynamics. Originally developed at MIT in the 1960s, it's played by teams representing different stages of a beer supply chain: production, distribution, wholesale, and retail. The objective is to manage inventory and orders effectively across the supply chain. The game illustrates the challenges of supply chain management, such as delays, fluctuating demand, and the bullwhip effect, where small changes in consumer demand cause larger variations up the supply chain. It's a hands-on tool for understanding systems dynamics and supply chain management principles”. A lot has been written about this game, since it has been used to train students as well as executives for a long time. The game plays out very differently depending on the demand flow settings. It becomes really interesting when volatility increases, since it almost always leads to shortages as well as overproduction. Here are the three main observations that I draw from this example (I was a happy participant during my MBA training 30+ years ago):

- Humans are poor at managing delays. This is a much more general law that applies to many more situations, from how we react to a cold/hot shower (there is a science about the piping length between the shower head and the faucet) to how we react to global warming; I talk about this often when dealing with complex systems. In the context of “The Beer Game”, it means that players react too late and over-react, leading to amplifying oscillations, from starvation to over-production.

- Humans usually have a hard time understanding the behavior of Jackson networks, that is, a graph (here a simple chain) of queues. This is the very same point as the previous observation: because we do not understand, we react too late, and the oscillating cycle of over-correction starts. This is what makes the game fun (for the observer) and memorable (for the participant).

- Seeing the big picture matters: communication is critical to handle variability. One usually plays in two stages: first, the teams (which each handle one station of the supply chain) are separated and work in front of a computer (seeing requests and sending orders). In the second stage, the teams are allowed to communicate. Communication helps dramatically, and the teams are able to stabilize the production flow according to the input demand variation.

Let me add that when I played the game, although I was quite fluent with Jackson networks and queueing theory at that time, and although I had read beforehand about the game, I was unable to help my team and we failed miserably to avoid oscillations, a lesson which I remember to this day.
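
The bullwhip effect described in the quote can be reproduced with a few lines of code. This is a stylized sketch, not the Beer Game itself: each stage of a hypothetical four-stage chain uses an order-up-to policy whose target level is (lead time + 1) times a moving-average forecast, so every order passes on the observed demand plus the change in the target. Shipments, inventories and the players’ misperception of delays are not modeled, and the lead time (2) and averaging window (4) are arbitrary choices. Customer demand steps once from 4 to 8 cases per period, in the spirit of the step used in the classic game.

```python
def stage_orders(incoming: list[float], lead_time: int = 2, window: int = 4) -> list[float]:
    """Orders placed by one stage running an order-up-to policy.

    The stage forecasts demand with a moving average over `window` periods and
    sets its target level to (lead_time + 1) * forecast, so each order is the
    demand just observed plus the change in that target. Orders cannot be
    negative: cases are not sent back upstream.
    """
    k = (lead_time + 1) / window
    orders = []
    for t, demand in enumerate(incoming):
        # Before enough history exists, assume a steady state at the first value.
        oldest = incoming[t - window] if t >= window else incoming[0]
        orders.append(max(0.0, demand + k * (demand - oldest)))
    return orders


def beer_chain(customer_demand: list[float],
               stages=("retailer", "wholesaler", "distributor", "factory")) -> dict[str, list[float]]:
    """Each stage's orders become the demand seen by the next stage upstream."""
    signal, orders_by_stage = list(customer_demand), {}
    for stage in stages:
        signal = stage_orders(signal)
        orders_by_stage[stage] = signal
    return orders_by_stage


demand = [4.0] * 10 + [8.0] * 30  # one step from 4 to 8 cases per period
for stage, orders in beer_chain(demand).items():
    print(f"{stage:12s} peak={max(orders):6.2f} trough={min(orders):5.2f}")
```

With these parameters, a single step from 4 to 8 cases produces order peaks of 11, 16.25, 25.4 and 41.5 cases from retailer to factory, followed by troughs that reach zero at the distributor and the factory: the bullwhip effect, without any human error involved.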

I will conclude this section with the digital twin paradox: these systems, whether a supply chain network or an information system delivering business processes, are easy to model and to simulate. Because of the systemic nature of the embedded queue network, simulations are very insightful. However, there are two major difficulties. First, the VUCA nature of the world makes it hard to characterize the incoming (demand) laws. It is not so hard to stress-test such a digital twin, but you must be aware of what you do not know (the “known unknowns”). Second, a model is a model, and the map is not the territory. This is the reason why techniques such as process mining, which reconstruct the actual processes from observed traces (logs), are so important. I refer you to the book “Process Mining in Action”, edited by Lars Reinkemeyer. Optimizing the policies to better serve a network of processes is of little use if the real-world processes are too different from the ones in the digital twin. This is especially true for large organizations and for Manufacturing or Order-to-Cash processes (see the examples from Siemens and BMW in the book). I am quoting here directly from the introduction: “Especially in logistics, Process Mining has been acknowledged for supporting a more efficient and sustainable economy. Logistics experts have been able to increase supply chain efficiency by reducing transportation routes, optimization of inventories, and use insights as a perfect decision base for transport modal changes”.
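
The core idea of process mining, reconstructing the actual process from logs, can be illustrated with its most basic artifact, the directly-follows graph: for each case, sort the events by timestamp and count how often one activity immediately follows another. This is a minimal sketch on a hypothetical order-to-cash log; dedicated process-mining tools go much further (conformance checking, performance analysis, filtering).

```python
from collections import Counter, defaultdict


def directly_follows(events: list[tuple[str, str, int]]) -> Counter:
    """Count how often activity a is immediately followed by activity b within a case.

    events are (case_id, activity, timestamp) records, e.g. extracted from system logs.
    """
    traces = defaultdict(list)
    for case_id, activity, _ in sorted(events, key=lambda e: (e[0], e[2])):
        traces[case_id].append(activity)
    dfg = Counter()
    for activities in traces.values():
        dfg.update(zip(activities, activities[1:]))
    return dfg


log = [  # hypothetical order-to-cash events
    ("o1", "order", 1), ("o1", "credit check", 2), ("o1", "ship", 3), ("o1", "invoice", 4),
    ("o2", "order", 1), ("o2", "ship", 2), ("o2", "credit check", 3), ("o2", "invoice", 5),
    ("o3", "order", 2), ("o3", "credit check", 4), ("o3", "ship", 6), ("o3", "invoice", 7),
]
for (a, b), n in directly_follows(log).most_common():
    print(f"{a} -> {b}: {n}")
```

Here one order out of three ships before its credit check, a deviation that a digital twin built from the “official” process would miss.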

4. Service Classes and Policies

Before working on how to maintain SLAs with asynchronous distributed information systems, I worked, over 20 years ago, on how to keep the target SLAs in a call center serving many types of customers with varying priorities and value creation opportunities. The goal was to implement different “service classes” associated with different SLAs (defined mostly as lead time, the sum of waiting time and handling time). I was collaborating with Contactual (a company sold to 8x8 in 2011), and my task was to design smart call routing algorithms (called SLR: Service Level Routing) that would optimize service class management in the context of call center routing. Service classes are a key characteristic of yield management or value pricing, that is, the ability to differentiate one’s promise according to the expected value of the interaction. This work led to a patent, “Declarative ACD routing with service level optimization”, which you may browse if you are curious; here I will just summarize what is relevant to the topic of this blog post.

Service Level Routing (SLR) is a proposed solution for routing calls in a contact center according to Service Level Agreements (SLAs). It dynamically adjusts the group of agents available for a queue based on current SLA satisfaction, ranking agents from less to more flexible. However, while SLR is effective in meeting SLA constraints, it can lead to reduced throughput, since it prioritizes SLA compliance over overall efficiency. Declarative control, where the routing algorithm is governed solely by SLAs, is an ideal approach. Reactive Stochastic Planning (RSP) is a method for this: a planner creates, and regularly updates, a schedule that incorporates both existing and forecasted calls. This schedule guides a best-fit algorithm aimed at fulfilling the forecast. However, RSP tends to plan for a worst-case scenario that seldom happens, leading to potential misallocation of resources.
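
To make the SLR idea more tangible, here is a minimal sketch of the “widen the agent group when the SLA is at risk” mechanism summarized above, with agents ranked from least to most flexible (here, simply by number of skills). The names, the `base_size` parameter and the proportional widening rule are my assumptions for illustration; this is not the patented algorithm.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    name: str
    skills: frozenset


def agent_pool(queue: str, agents: list[Agent], sla_satisfaction: float,
               sla_target: float, base_size: int = 2) -> list[Agent]:
    """Agents allowed to take calls from `queue`, widened when its SLA is at risk.

    Capable agents are ranked from least to most flexible (fewest skills first),
    so versatile agents are only pulled in when this queue falls behind its target.
    sla_satisfaction and sla_target are shares of calls answered within the SLA.
    """
    capable = sorted((a for a in agents if queue in a.skills), key=lambda a: len(a.skills))
    size = base_size
    if sla_satisfaction < sla_target:
        deficit = (sla_target - sla_satisfaction) / sla_target  # between 0 and 1
        size += math.ceil(deficit * max(0, len(capable) - base_size))
    return capable[:size]


agents = [
    Agent("Ann", frozenset({"billing"})),
    Agent("Bob", frozenset({"billing", "sales"})),
    Agent("Cid", frozenset({"billing", "sales", "tech"})),
    Agent("Dee", frozenset({"sales"})),
]
print([a.name for a in agent_pool("billing", agents, 0.85, 0.80)])  # ['Ann', 'Bob']
print([a.name for a in agent_pool("billing", agents, 0.40, 0.80)])  # ['Ann', 'Bob', 'Cid']
```

When the billing queue meets its target, only the two least flexible billing agents serve it; when satisfaction falls to half the target, the versatile agent is pulled in as well. This is also where the throughput cost mentioned above comes from: Cid is then less available for the other queues.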
The short summary of the SLR solution development is strikingly similar to the previous example of Section 3:

- Being (at the time) a world-class specialist of stochastic scheduling and resource allocation, I spent quite some time designing algorithms that would pre-allocate resources (here, groups of agents with the required skills) to groups of future incoming calls. It worked beautifully when the incoming calls followed the expected distribution, and failed otherwise.

- I then looked at simpler “policy” (rule-based) algorithms and found that I could get performance results that were still close to what I got when the call distribution was known beforehand (volatile but not uncertain), without making such a hypothesis (the algorithm continuously adapts to the incoming distribution and is, therefore, much more resilient).

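- The art of SLR is to balance between learning too much from the past and adapting quickly to a “new world”. I empirically rediscovered Taleb’s law: in the presence of true (VUCA) complexity, it is better to stick to simple formulas/methods/algorithms to avoid the “black swans” of the unintended consequences of complexity.

The same lessons are found in DDMRP (demand driven material requirement planning), one of the leading approaches to live with a dynamic, adaptive supply chain. Here are the points from a book on DDMRP that resonate most with this post: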

- To reduce the variability, one needs to work on the flow (cf. the adaptive middleware example): “The need for flow is obvious in this framework since improved flow results from less variability”.

- Frequent reaction yields better adaptation (the heart of agility): “This may seem counterintuitive for many planners and buyers, but the DDMRP approach forces as frequent ordering as possible for long lead time parts (until the minimum order quantity or an imposed order cycle becomes a constraining factor)”.

- Beware of global planning methods that bring “nervousness” (“This constant set of corrections brings us to another inherent trait of MRP called nervousness”: small changes in the input demand produce large changes in the output plan). I have told enough of my war stories in this post, but this is exactly the issue that I worked on more than 30 years ago, when scheduling fleets of repair trucks for the US telcos at Bellcore.

- DDMRP is a hybrid approach that combines the lean tradition of demand-driven pull management with Kanban and the stochastic sizing of buffers (“The protection at the decoupling point is called a buffer. Buffers are the heart of a DDMRP system”): “What if both camps are right? What if in many environments today the traditional MRP approach is too complex, and the Lean approach is too simple?”

- However, stochastic sizing also needs frequent updates to gain resilience and adaptability: “Yet we know that those assumptions are extremely short-lived, as conventional MRP is highly subject to nervousness (demand signal distortion and change) and supply continuity variability (delay accumulation)”.
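
The buffer arithmetic behind these quotes is simple enough to sketch. The code below follows the zone formulas commonly presented for DDMRP, simplified: yellow = ADU × DLT, red = ADU × DLT × lead-time factor × (1 + variability factor), green = max(ADU × DLT × lead-time factor, MOQ, ADU × order cycle), and a replenishment order up to the top of green whenever the net flow position (on hand + on order - qualified demand) falls to the top of yellow or below. Demand adjustment factors and spike qualification are left out, and all parameter values are hypothetical. Recomputing the average daily usage (ADU) over a rolling window is what keeps the sizing adaptive.

```python
from dataclasses import dataclass


@dataclass
class Buffer:
    red: float
    yellow: float
    green: float

    @property
    def top_of_yellow(self) -> float:
        return self.red + self.yellow

    @property
    def top_of_green(self) -> float:
        return self.red + self.yellow + self.green


def size_buffer(adu: float, dlt: float, lead_time_factor: float,
                variability_factor: float, moq: float, order_cycle: float) -> Buffer:
    """Size the red/yellow/green zones of one decoupling-point buffer."""
    red_base = adu * dlt * lead_time_factor
    red = red_base * (1 + variability_factor)      # red base + red safety
    yellow = adu * dlt                             # demand over the decoupled lead time
    green = max(red_base, moq, adu * order_cycle)  # drives order size and frequency
    return Buffer(red, yellow, green)


def order_quantity(buffer: Buffer, on_hand: float, on_order: float,
                   qualified_demand: float) -> float:
    """Order up to top of green when the net flow position is at or below top of yellow."""
    net_flow = on_hand + on_order - qualified_demand
    return buffer.top_of_green - net_flow if net_flow <= buffer.top_of_yellow else 0.0


usage = [9, 10, 11, 10, 9, 10]  # hypothetical daily usage, most recent last
adu = sum(usage[-5:]) / 5       # rolling ADU, recomputed every period
buf = size_buffer(adu, dlt=5, lead_time_factor=0.5, variability_factor=0.5,
                  moq=20, order_cycle=3)
print(buf, order_quantity(buf, on_hand=40, on_order=20, qualified_demand=10))
```

With an ADU of 10, the zones are 37.5 / 50 / 30 units, and a net flow position of 50 (below the top of yellow at 87.5) triggers an order of 67.5 units to reach the top of green.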

The main idea from the book is that we need to distinguish volatility from uncertainty, and that the hard part is adapting to uncertainty. One may add that all stochastic optimization methods tend to suffer from a classical weakness: they assume that many input stochastic variables are statistically independent, and they fare poorly when these variables are bound to a common root cause, which we usually call a crisis. This applies to the 2008 subprime crisis as well as to so many other situations.
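
The independence weakness can be quantified with a toy one-factor model (my illustration, with hypothetical numbers): ten suppliers each fail with probability 5%. Under independence, three or more simultaneous failures are rare. If instead a crisis occurring with probability 5% makes each supplier fail with probability 50% (the calm-period rate being lowered so that each supplier’s overall failure probability stays at 5%), the marginal statistics are unchanged but the joint tail is not.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): at least k of n independent failures."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def prob_at_least_with_crisis(k: int, n: int, p: float,
                              p_crisis: float, p_fail_in_crisis: float) -> float:
    """Same marginal failure rate p, but failures share a common root cause.

    With probability p_crisis a crisis hits and each of the n units fails with
    probability p_fail_in_crisis; otherwise each fails with a lower "calm" rate,
    chosen so that every unit's overall failure probability is still p.
    """
    p_calm = (p - p_crisis * p_fail_in_crisis) / (1 - p_crisis)
    if not 0 <= p_calm <= 1:
        raise ValueError("parameters are inconsistent with the marginal rate p")
    return (p_crisis * prob_at_least(k, n, p_fail_in_crisis)
            + (1 - p_crisis) * prob_at_least(k, n, p_calm))


independent = prob_at_least(3, 10, 0.05)
correlated = prob_at_least_with_crisis(3, 10, 0.05, p_crisis=0.05, p_fail_in_crisis=0.5)
print(f"{independent:.2%} vs {correlated:.2%}")
```

The independent model gives about 1.2% for three or more failures, while the crisis mixture gives about 4.9%, roughly four times more, even though each supplier, taken alone, looks exactly the same.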

To end this blog post, I will return to the practical issue of filling one’s calendar in a VUCA world. This is an old favorite of mine, which I already discussed in my second book (the English edition may be found here). The problem that I want to solve when managing my calendar is, unsurprisingly, to maximize the expected value of what gets in while keeping the agility (flexibility of non-assigned time slots) to also capture the hypothetical value of high-priority opportunities that may come later. It is easy to adapt the two principles that we have discussed in this blog post:

- Reservation is “zoning”, that is, defining temporal zones that you reserve for special types of activities. For many years, as a CIO, I reserved the late hours of the day (6pm-8pm) for crisis management. This supports a 24h SLA when a crisis occurs, and the capability to keep following the crisis with a daily frequency. Zoning works, but at the expense of flexibility when many service classes are introduced (overfitting of the model). It is also at the expense of the global service level (as with any reservation policy). In addition to its capacity to optimize the value of your agenda, zoning has the additional benefit of team/organization synchronization: if your zoning rules are shared, you gain a new level of efficiency (a topic not covered today, but a key stake in my books and other blog posts).

- Dynamic routing means assigning different SLAs according to the service class, that is, finding an appointment in the near future for high-value/high-priority jobs, but using a longer time horizon as the priority declines. The goal here is to avoid the terrible shortcoming of first-come first-served, which inevitably leads to the “full agenda syndrome” (so easy to fall into when you are a CIO or an ophthalmologist). Dynamic routing is less effective than zoning, but it is adaptive, and works well (i.e., compared to doing nothing) even when the incoming rate of requests shifts to a completely different distribution.

As mentioned in the introduction, my current policy, after many years of tinkering, is to apply both. Reservation should be used sparingly (with the constant reminder to yourself that you do not know the future) because it reduces agility, but it is very effective. The more the agenda is overbooked, the more reservations are required. Dynamic routing, on the other hand, is what works best in uncertain times, when the overbooking is less acute. It blends fluidly with the art of saying no, since saying no is the special case of returning an infinite lead time for the next appointment. It is by no means a silver bullet, since we find here the weakness of all sorting methods (such as the agile backlog): assigning a future value to a meeting opportunity is an art.
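
Both principles fit in a few lines of code. In this minimal sketch (slot granularity, class names and windows are all hypothetical), reserved slots form the crisis zone, and each other service class is only searched within its own window of future slots: high-priority requests get the near future, lower priorities are pushed further out, and a request that finds no slot in its window gets `None`, the infinite lead time of saying no. The windows are fixed here; an adaptive version would stretch or shift them as the observed load changes.

```python
from typing import Optional

# Hypothetical service classes: (earliest, latest) offset in slots from now.
# Lower priorities are pushed further out, keeping near-term slots open for
# high-value requests that have not arrived yet.
WINDOWS = {"crisis": (0, 4), "high": (0, 5), "medium": (3, 20), "low": (10, 40)}


def book(calendar: dict[int, str], reserved: set[int], now: int,
         request: str, service_class: str) -> Optional[int]:
    """Book a slot for `request` and return it, or None to say no.

    Zoning: slots in `reserved` are kept for crisis requests, which may only use them.
    Dynamic routing: each service class is searched within its own window, so a
    low-priority request never takes a near-term slot.
    """
    earliest, latest = WINDOWS[service_class]
    crisis = service_class == "crisis"
    for slot in range(now + earliest, now + latest + 1):
        if slot not in calendar and (slot in reserved) == crisis:
            calendar[slot] = request
            return slot
    return None  # no acceptable slot: an infinite lead time


reserved = {slot for slot in range(60) if slot % 5 == 4}  # e.g. one evening slot per day
calendar: dict[int, str] = {}
print(book(calendar, reserved, 0, "vendor pitch", "low"))      # 10
print(book(calendar, reserved, 0, "outage review", "crisis"))  # 4
print(book(calendar, reserved, 0, "board prep", "high"))       # 0
```

Even though the low-priority request arrived first, it did not consume a near-term slot, which stays available for the board meeting; once the low-priority window is full, further low-priority requests are simply declined.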

5. Conclusion

I will conclude this blog post with three “laws” that one should keep in mind when managing promises, such as service level agreements, in a VUCA world:

- Forecasting is difficult in a VUCA world, a little bit because of volatility and uncertainty (by definition), but mostly because of complexity and non-linearity between causal factors. We could call this the “Silberzahn law”; it becomes more important the longer the time horizon you consider (as told in another blog post, the paradox of the modern world is both the increasing relevance of short-term forecasts, thanks to data and algorithms, and the increasing irrelevance of long-term forecasts).

- Complex and uncertain situations are best managed with simple formulas and simple policies, which we could call the “Taleb Law”. This is a consequence both of the mathematics behind stochastic processes (cf. the Pollaczek–Khinchine formula) and of complex systems with feedback loops. Here, the rule is to be humble and to beware of our own hubris as system designers.

- When trying to secure resources to deliver promises made in a VUCA context, beware of hard resource reservation and favor adaptive policies, while keeping the previous law in mind. Aim for “graceful degradation” and keep Lean “system thinking” in mind when designing your supply / procurement / orchestration processes.